Abstract

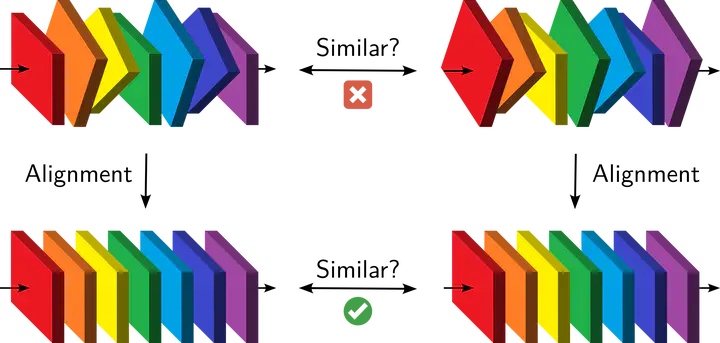

What do neural networks learn? A major difficulty is that each training run produces a different set of weights, yet every run reaches the same performance. We introduce a model of the probability distribution of these weights. Layers are not independent, but their dependencies can be captured by an alignment procedure. We use this model to show that networks learn the same features regardless of their initialization. We also compress trained weights into a reduced set of summary statistics, from which a family of networks with equivalent performance can be reconstructed.
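The abstract does not say how the alignment is computed. As a minimal sketch, assume that a hidden layer of one network is aligned to the same layer of another by an orthogonal rotation fitted on their activations over shared inputs. This is orthogonal Procrustes, a standard technique substituted here for illustration, not necessarily the authors' procedure. The function name `procrustes_align` is hypothetical.

```python
import numpy as np


def procrustes_align(acts_a: np.ndarray, acts_b: np.ndarray) -> np.ndarray:
    """Return the orthogonal matrix R minimizing ||acts_a @ R - acts_b||_F.

    Hypothetical helper (illustrative assumption, not the paper's code).
    acts_a, acts_b: (n_samples, n_units) activations of the same layer in
    two independently trained networks, evaluated on the same inputs.
    """
    # Cross-covariance between the two networks' activations.
    cross = acts_a.T @ acts_b
    # The SVD of the cross-covariance yields the optimal rotation
    # (closed-form solution of the orthogonal Procrustes problem).
    u, _, vt = np.linalg.svd(cross)
    return u @ vt


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 500, 16
    acts_a = rng.standard_normal((n, d))
    # Simulate a second network whose features are a rotated copy of the first.
    q, _ = np.linalg.qr(rng.standard_normal((d, d)))
    acts_b = acts_a @ q
    r = procrustes_align(acts_a, acts_b)
    print(np.allclose(r, q))  # True: the hidden rotation is recovered
```

In this toy setting the two "networks" differ only by a rotation of their features, so the fitted matrix recovers that rotation exactly. Real networks will differ by more than a rotation, and the residual after alignment is one way to measure how similar their learned features are.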

Type

Publication

arXiv